Hi everyone,

I’m taking a brief detour from our usual service today to share a few tips about AI and Large Language Models (LLMs) like ChatGPT, and how we might use them in our daily work. I’ll start with a brief summary of what LLMs are and how they fit into the bigger picture of machine learning and AI. After that, I’ll give several examples, across a range of topics, of how ChatGPT responds to questions we might have, and talk about how to optimise the output.

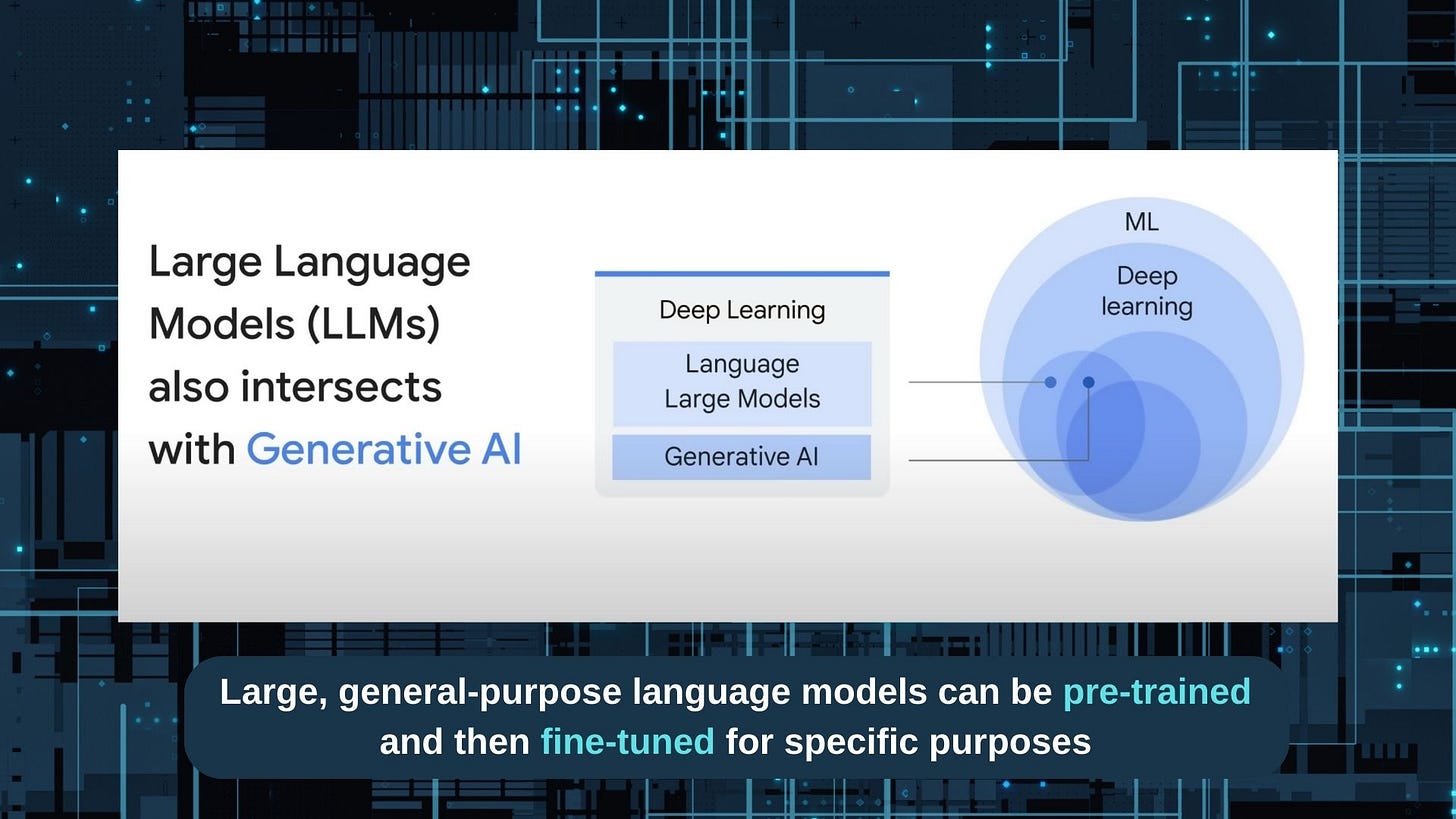

LLMs, such as ChatGPT from the company OpenAI, are a subset of machine learning that overlaps with generative AI. Other generative AI projects include Midjourney (midjourney.com), the AI art generator.

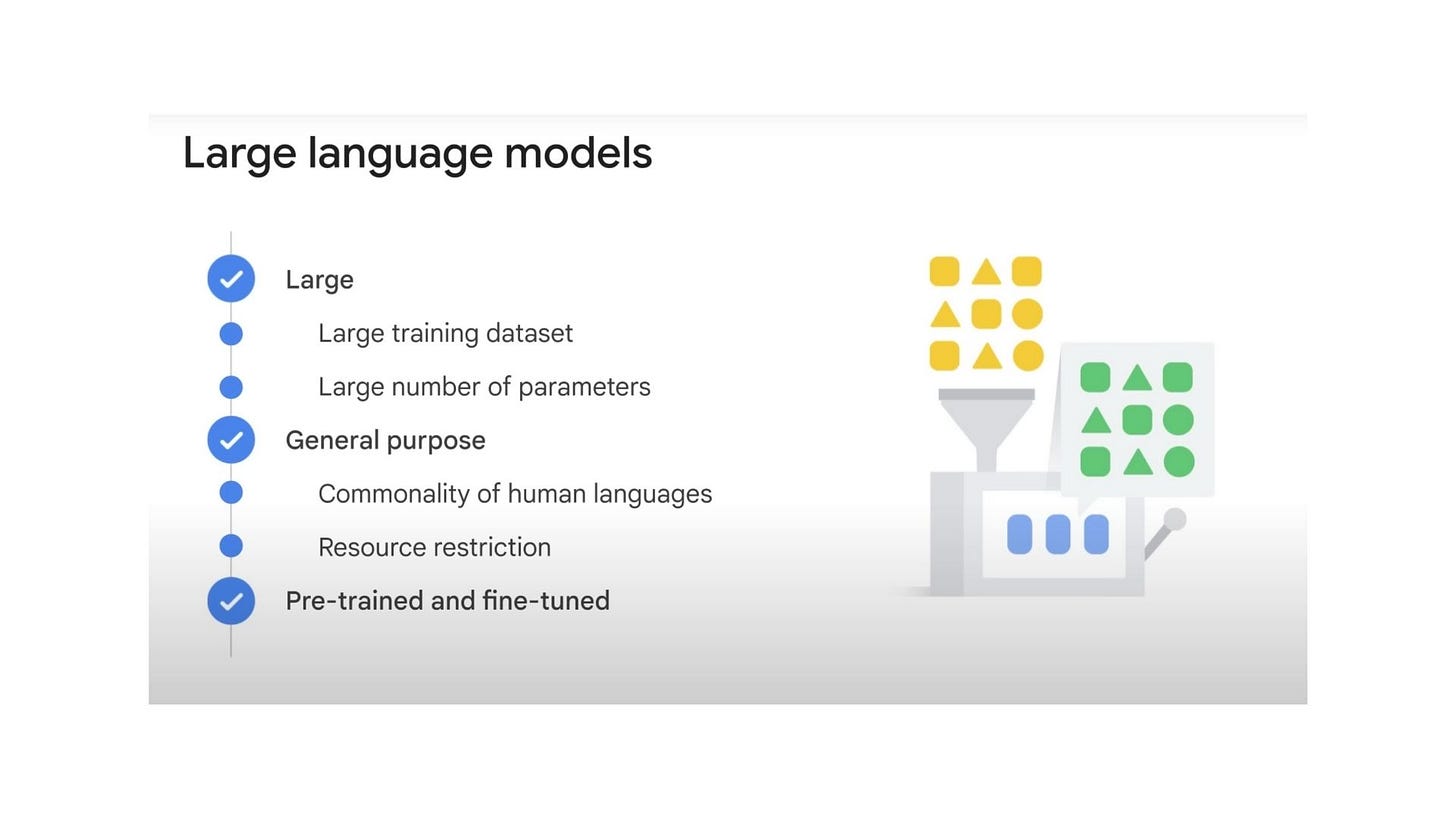

LLMs have been trained on huge datasets - ChatGPT, for example, was trained on a dataset including billions of webpages, full-text books and articles. The key feature of LLMs is that they are trained for natural language input and output.

There are two versions of ChatGPT live right now: GPT-3.5 and GPT-4. Version 3.5 is free, while GPT-4 is available through the ChatGPT Plus subscription, which costs US$20 per month. Version 3.5 is reported to have around 175 billion parameters and responds to queries in a very human-like way. GPT-4 had an additional six months of human/AI feedback, is based on a larger and more recent set of training data and is widely reported to have over 1 trillion parameters, although OpenAI has not published the figure. GPT-4 can craft more nuanced, more accurate responses and is less prone to what OpenAI calls ‘hallucinations’, or false responses.

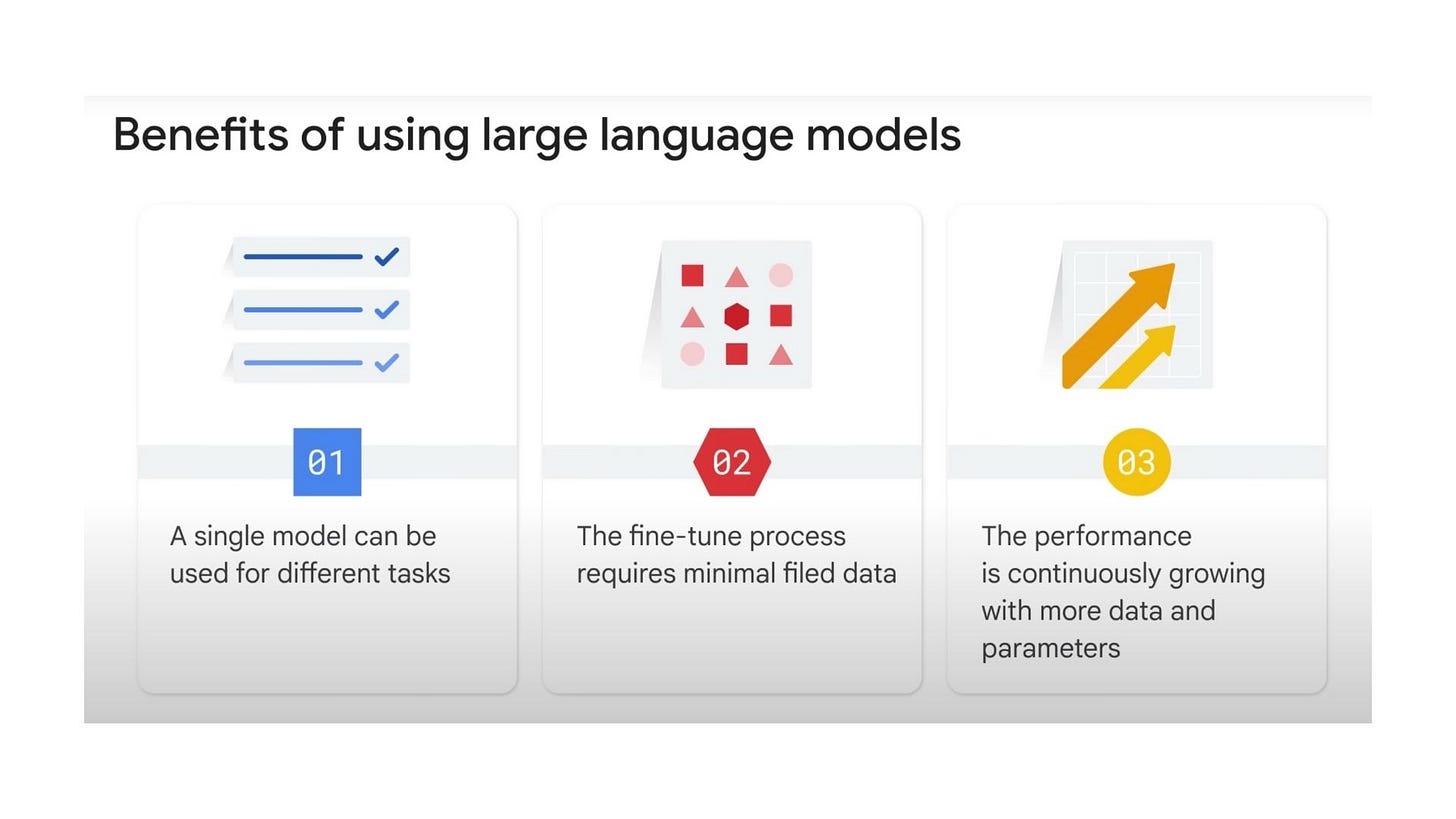

One of the key advantages is that, once developed, LLMs can be used for a range of tasks and specialised sub-sets of tasks. Adapting the outputs does not require complex programming, AND the model can keep learning (if you tell it to). Traditional machine learning models are very complex in comparison. What you do need to know about when using LLMs is prompt design.

When we do a Google search, we typically throw it some keywords and these generally reflect words that would be found on the page we are looking for. It’s quite simplistic.

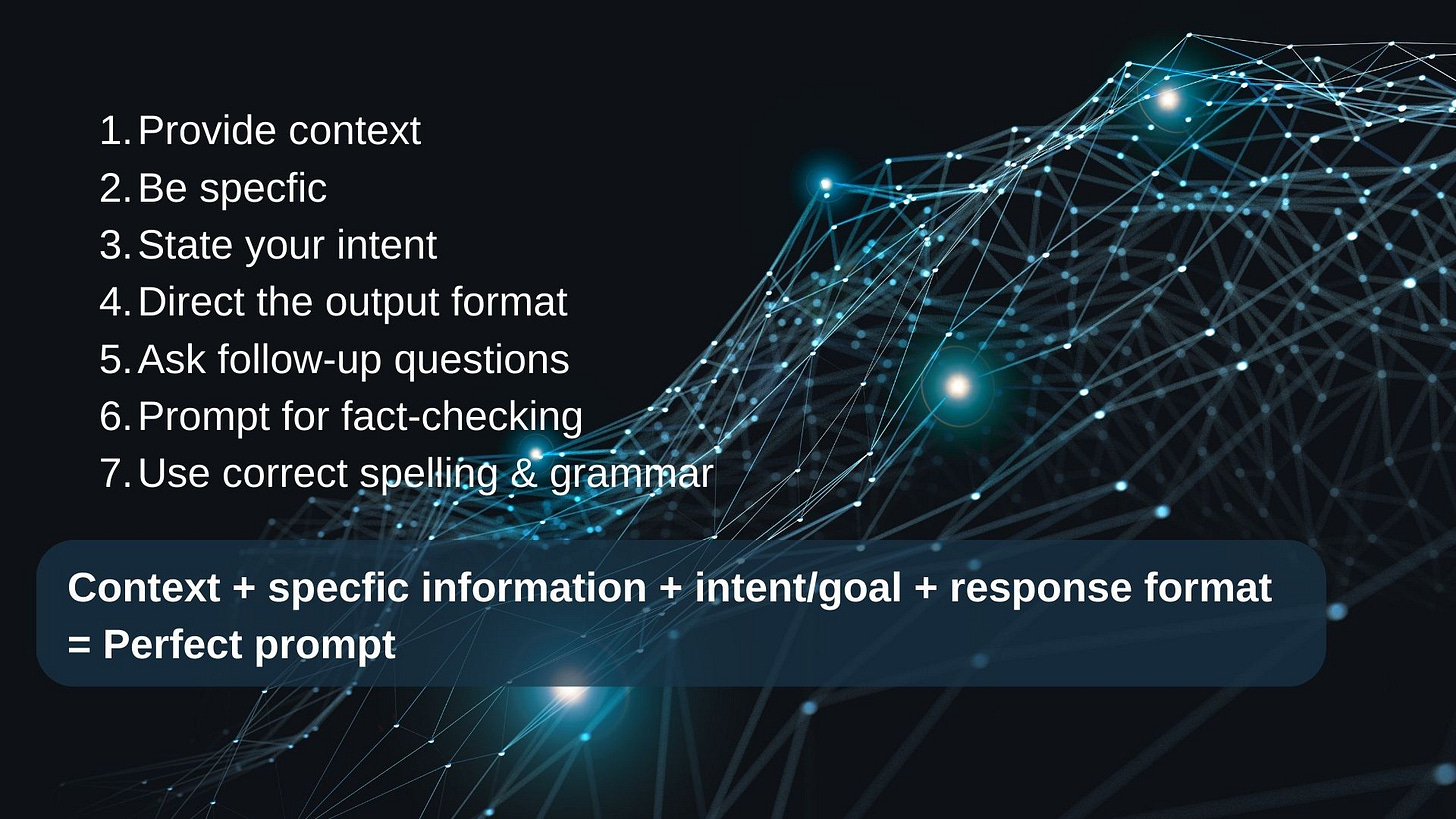

When you use LLMs, you need to think carefully about the prompts you provide because they will determine the quality and structure of the output you receive.

Choice of prompts

There are two approaches used:

Sequential prompts - start broad and home in on what you’re looking for.

Mega-prompts - be very specific from the outset, with fine-tuning at the end.

Generally - and especially for tasks you will repeat - mega-prompts are very useful and can be faster.

Another factor is priming - you can tell ChatGPT to look at some information, analyse it, and use its analysis in the subsequent action you’re asking it to take.

I’ve found that the best prompts are ones that include the following 7 features:

Provision of context

Specificity

Statements of intent

Direction to the output format

Follow-up questions to fine-tune

Prompts for fact-checking (ask ChatGPT to check its own answers or to consider various alternatives and then compare them to the initial response)

Use of correct spelling and grammar.

Security

Before starting, just to be clear, you should never include the personal information of others (e.g., patients) in LLMs. Also, under Settings>Data controls, you can tell ChatGPT NOT to remember your conversation and to NOT use it for model training or improvement.

Examples of use

Here are some examples to demonstrate:

I had a patient for whom I did a thrombophilia screen, and she returned positive for Factor V Leiden. I wasn’t going to see her for a while and needed to let her know the result and the implications. The first prompt was broad and less helpful (although factually correct).

Prompt 1

What does factor V Leiden heterozygote mean?

Prompt 2

I am a doctor who needs to write an email to my patient about her Factor V Leiden test result. This showed that she is a Factor V Leiden heterozygote. Please write an email explaining the implications of this to the patient. Please use grade 9 language to improve readability and explain any medical terms. Use bullet points where appropriate and British English spelling. Let the patient know that their GP has a copy of the result, too, in case she sees them first.

Here’s the response to Prompt 2. As you can see, it’s not perfect and would absolutely need adjusting for the circumstance, but it’s a great start!

Fine-tuning

The key here is that I gave the AI context and statements of intent. I was specific in what I asked it to do and directed the output clearly. If you didn’t include all of these in the initial prompt, you can add follow-ups like:

Please change to British English spelling

Make the text 50% easier to read

Increase complexity by 100%

Rewrite with an explanation of complex medical terms, etc.
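If you reuse the same kind of prompt often, one option is to keep the pieces (context, intent, specifics, output format and follow-ups) as a small template. The Python sketch below is only an illustration: `build_prompt` and `FOLLOW_UPS` are names I’ve made up, nothing here talks to ChatGPT, and it simply prints text for you to paste into the chat window.

```python
# Hypothetical helper for illustration; these names are not part of ChatGPT.
def build_prompt(context, intent, specifics, output_format):
    """Join the parts of a mega-prompt into one block of text to paste in."""
    parts = [context, intent, *specifics, output_format]
    # Drop empty parts and tidy stray whitespace before joining.
    return " ".join(p.strip() for p in parts if p and p.strip())


# Follow-up prompts to paste afterwards if the first answer needs adjusting.
FOLLOW_UPS = [
    "Please change to British English spelling",
    "Make the text 50% easier to read",
]

prompt = build_prompt(
    context=(
        "I am a doctor who needs to write an email to my patient "
        "about her Factor V Leiden test result."
    ),
    intent="Please write an email explaining the implications of this to the patient.",
    specifics=[
        "This showed that she is a Factor V Leiden heterozygote.",
        "Explain any medical terms.",
    ],
    output_format=(
        "Use grade 9 language, bullet points where appropriate "
        "and British English spelling."
    ),
)

print(prompt)
for follow_up in FOLLOW_UPS:
    print(follow_up)
```

Keeping the parts separate makes it easy to swap the context or the output format for the next patient letter without retyping the whole prompt.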

More examples

What else can you use it for? These are all first prompt answers without any additional context or questioning.

Ideas for research/audit in the workplace

Exam prep or test your knowledge on a subject

Career advice

Personal development

Case reflection and support (note: topic is pregnancy loss)

Link to example prompts and GPT-4 responses

Priming

Finally, I mentioned priming before. Here’s what a priming prompt might look like:

I’m going to give you 3 pieces of text. After each input, please acknowledge you have received it by responding ‘Received, please enter next’. After the third entry, ask me for more instructions.

[You paste in 3 pieces of writing that are in the style you want the output to match - e.g. your typical writing style, letters, articles, blog post, etc.]

Instruction: Please analyse the writing style of these 3 texts and summarise what you find. After that, I’m going to ask you to create some material in the style of the text already entered.

Pretend you are the best-selling author of books about medical conditions for the public. Use your writing and editorial skills to craft a 500-word text about X. Use British English spelling and target the output for broad consumer reading. Use bullet points if you think it will improve readability.

I haven’t got an output example for this, but as you can see, sequential instructions make each prompt shorter and also let you work around limits on input length.
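For those who like to prepare things in advance, the priming steps above can be laid out as an ordered list of prompts. This is a rough Python sketch under my own assumptions: `priming_sequence` is a made-up name, the wording is adapted from the prompts above, and the code only builds the text to paste in, one entry at a time.

```python
# Hypothetical helper for illustration; it only prepares text, it does not call ChatGPT.
def priming_sequence(samples, final_task):
    """Return the prompts to paste, in order, for a priming conversation."""
    n = len(samples)
    opening = (
        f"I'm going to give you {n} pieces of text. After each input, please "
        "acknowledge you have received it by responding "
        "'Received, please enter next'. "
        f"After entry number {n}, ask me for more instructions."
    )
    analyse = (
        f"Please analyse the writing style of these {n} texts and summarise "
        "what you find. After that, I'm going to ask you to create some "
        "material in the style of the text already entered."
    )
    # Order matters: instructions first, then each sample, then the analysis
    # request, and only then the actual writing task.
    return [opening, *samples, analyse, final_task]


steps = priming_sequence(
    samples=["<first sample>", "<second sample>", "<third sample>"],
    final_task="Craft a 500-word text about X. Use British English spelling.",
)
for number, step in enumerate(steps, start=1):
    print(f"Step {number}: {step}")
```

With three samples this gives six steps, and each one is pasted as its own message so that no single input gets too long.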

If you ask for a longer output, sometimes it stalls, and you just have to prompt it with ‘continue’ or ‘keep going’.

Finally

I hope that gives you some ideas about how to use ChatGPT more effectively. Add your thoughts here - I recognise this stuff can be pretty controversial! But if we’re not at the table, we cannot complain about how medicine and healthcare organisations adopt or reject new technologies. A couple of other AI services I use:

Cheers,

Danny

PS. Research about LLM use in healthcare was published in the journal Nature 2 days ago: ‘'AI doctor' better at predicting patient outcomes, including death’

‘Overall, NYUTron identified an unnerving 95 percent of people who died in hospital before they were discharged, and 80 percent of patients who would be readmitted within 30 days.’